With the increasing demand for automation in the 21st century, robots are advancing at an unprecedented pace in numerous industries such as logistics, warehousing, manufacturing, and food distribution. Human-machine interaction, precise control, and safe human-machine collaboration are the cornerstones of automation adoption.

In robotics, safety is a multi-faceted task: collision detection, obstacle avoidance, navigation and positioning, force detection, and proximity detection are just a few examples. These tasks are accomplished by a suite of sensors, including LiDAR, imaging/vision sensors (cameras), tactile sensors, and ultrasonic sensors. With advances in machine vision technology, cameras are becoming increasingly important in robots.

Sensors in the device

Charge-coupled device (CCD) and complementary metal-oxide semiconductor (CMOS) sensors are the most common types of vision sensors. In a CMOS sensor, each pixel converts its charge into a corresponding voltage, and the chip usually also integrates amplifiers, noise correction, and digitization circuitry, so it outputs digital data. In contrast, a CCD sensor is an analog device: it contains an array of photosensitive elements whose charge is shifted across the chip and converted to a voltage at a shared output.

Although both types have their advantages, advances in CMOS technology mean that CMOS sensors are now widely considered the most suitable choice for robot machine vision: compared with CCD sensors, they are smaller, cheaper, and consume less power.

Vision sensors can be used for motion and distance estimation, target recognition, and positioning. Their main advantage is that they capture more information, at higher resolution, than other sensors such as LiDAR and ultrasonic sensors.

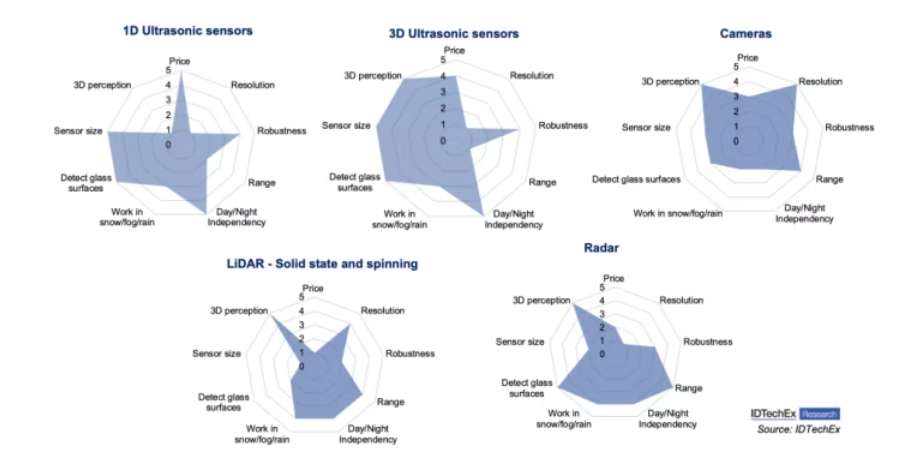

Figure 1 compares the different sensors based on nine reference points. Vision sensors have high resolution and low cost. However, they are inherently vulnerable to bad weather and light; Therefore, when robots work in environments with unpredictable weather or difficult terrain, other sensors are often needed to improve the robustness of the overall system.

Figure 1: Comparison of several sensors commonly used by robots

Vision sensors for mobile robot safety

Mobile robotics is one of the largest robotics applications in which cameras are used for target classification, safety, and navigation. The term "mobile robot" mainly refers to automated guided vehicles (AGVs) and autonomous mobile robots (AMRs). However, autonomous mobility also plays an important role in many other robots, from food delivery robots to autonomous agricultural robots (e.g., lawnmowers). Autonomous mobility is an inherently complex task that requires obstacle avoidance and collision detection.

Depth estimation is one of the key steps in obstacle avoidance. It takes one or more red, green, and blue (RGB) images captured by the vision sensor as input, which machine vision algorithms use to reconstruct a 3D point cloud and thus estimate the distance between an obstacle and the robot. As of 2023, most mobile robots (e.g., AGVs, AMRs, food delivery robots, sweeping robots) are still used indoors, in well-controlled environments such as warehouses, factories, shopping malls, and restaurants that offer stable Internet connectivity and lighting.

As a result, cameras can perform at their best, and machine vision tasks can be offloaded to the cloud, greatly reducing the computing power required on the robot itself and thereby lowering costs. Grid-based AGVs, for example, need only cameras to monitor magnetic strips or QR codes on the floor. While this approach is widely used today, it does not work well for outdoor sidewalk robots or patrol robots operating in areas with limited Wi-Fi coverage (e.g., under a tree canopy).

In-camera computer vision has emerged to solve this problem. As the name suggests, image processing takes place entirely inside the camera. Given the growing demand for outdoor robots, especially those designed to work in difficult terrain and harsh environments (e.g., exploration robots), in-camera computer vision will be increasingly needed in the long term. In the short term, however, the power consumption of onboard computer vision and the high cost of the chips may hinder its adoption.

Many robotics OEMs (original equipment manufacturers) therefore prefer other sensors (e.g., ultrasonic sensors, LiDAR) as their first choice for enhancing the safety and robustness of their products' environmental awareness.
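To make the depth-estimation step described earlier in this section more concrete, the following Python sketch shows one common multi-image approach, stereo vision. Stereo is an illustrative choice here, not necessarily the method any particular robot uses. The sketch assumes a calibrated, rectified stereo pair modelled as a pinhole camera, and a disparity map that has already been computed (for example by block matching). The class `StereoCamera`, the helper functions, and all numeric values are hypothetical. Depth follows from Z = f·B/d, and each valid pixel is back-projected into a 3D point cloud from which the nearest obstacle ahead of the robot can be read off.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


@dataclass
class StereoCamera:
    """Hypothetical calibrated, rectified stereo camera (pinhole model)."""
    focal_px: float    # focal length in pixels (assumed equal in x and y)
    baseline_m: float  # distance between the two camera centres, in metres
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def depth_from_disparity(cam: StereoCamera, disparity_px: float) -> Optional[float]:
    """Depth Z = f * B / d; returns None when no valid match was found (d <= 0)."""
    if disparity_px <= 0:
        return None
    return cam.focal_px * cam.baseline_m / disparity_px


def disparity_to_point_cloud(
    cam: StereoCamera, disparity: Sequence[Sequence[float]]
) -> List[Point3D]:
    """Back-project each valid pixel (u, v) into camera coordinates (x right, y down, z forward)."""
    points: List[Point3D] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            z = depth_from_disparity(cam, d)
            if z is None:
                continue  # skip pixels without a disparity estimate
            x = (u - cam.cx) * z / cam.focal_px
            y = (v - cam.cy) * z / cam.focal_px
            points.append((x, y, z))
    return points


def nearest_obstacle_distance(points: List[Point3D], half_width_m: float) -> Optional[float]:
    """Smallest forward distance z among points within +/- half_width_m of the robot's heading."""
    ahead = [z for x, _, z in points if abs(x) <= half_width_m]
    return min(ahead) if ahead else None


if __name__ == "__main__":
    cam = StereoCamera(focal_px=700.0, baseline_m=0.12, cx=1.0, cy=1.0)
    # Toy 2x3 disparity map; 0 marks pixels where stereo matching failed.
    disparity = [[0.0, 42.0, 0.0],
                 [21.0, 84.0, 21.0]]
    cloud = disparity_to_point_cloud(cam, disparity)
    print(len(cloud), "points; nearest obstacle at",
          nearest_obstacle_distance(cloud, half_width_m=0.5), "m")
```

With these toy values, a disparity of 42 px, a focal length of 700 px, and a 0.12 m baseline give a depth of 2 m. Single-image (monocular) depth estimation usually relies on learned models instead and is not covered by this sketch.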

Improved detection range, mobility and efficiency

Conventional CMOS detectors for visible light are common in robotics and industrial imaging; however, more sophisticated image sensors hold great promise, offering performance beyond simply capturing RGB intensity values. Significant investment is being made in low-cost image sensor technologies that can detect light beyond the visible range, into the short-wave infrared (SWIR) range of 1,000 to 2,000 nm.

Extending wavelength detection into the SWIR range brings many benefits to robotics, because it makes it possible to distinguish materials that look similar or identical under visible light. This greatly improves identification accuracy and paves the way for better, more practical robotic sensing.
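The benefit of extra SWIR bands can be illustrated with a toy nearest-spectrum classifier. The sketch below compares a pixel's spectrum with reference spectra using the spectral angle, a standard comparison technique chosen here for illustration rather than one named in this article. The two materials, the five-band layout, and all reflectance values are hypothetical, not measured data: the materials are identical in the three visible bands and differ only in the two SWIR bands, so they can only be told apart once the SWIR bands are included.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical band layout: indices 0-2 are visible, 3-4 are SWIR.
VISIBLE = [0, 1, 2]
ALL_BANDS = [0, 1, 2, 3, 4]

# Hypothetical reflectance spectra (illustrative values, not measured data).
REFERENCES: Dict[str, List[float]] = {
    "material_a": [0.30, 0.30, 0.30, 0.60, 0.55],
    "material_b": [0.30, 0.30, 0.30, 0.20, 0.10],
}


def spectral_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in radians between two spectra; ignores overall brightness (vector length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return math.acos(max(-1.0, min(1.0, dot / norm)))  # clamp guards against rounding


def angles(
    pixel: Sequence[float], references: Dict[str, List[float]], bands: Sequence[int]
) -> Dict[str, float]:
    """Spectral angle from the pixel to each reference, using only the selected bands."""
    sub = [pixel[i] for i in bands]
    return {name: spectral_angle(sub, [ref[i] for i in bands])
            for name, ref in references.items()}


def classify(
    pixel: Sequence[float], references: Dict[str, List[float]], bands: Sequence[int]
) -> str:
    """Name of the reference material with the smallest spectral angle."""
    scores = angles(pixel, references, bands)
    return min(scores, key=scores.get)


if __name__ == "__main__":
    pixel = [0.29, 0.31, 0.30, 0.21, 0.11]  # hypothetical reading from material_b
    print("visible only:", angles(pixel, REFERENCES, VISIBLE))  # equal angles: ambiguous
    print("visible + SWIR:", classify(pixel, REFERENCES, ALL_BANDS))
```

Using only the visible bands, both references are exactly the same distance from the pixel, so the result is ambiguous; adding the SWIR bands separates them clearly.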

In addition to imaging over a wider spectral range, further innovations include imaging over a larger area, acquiring spectral data at each pixel, and simultaneously improving temporal resolution and dynamic range. One promising approach in this regard is event-based vision.

With traditional frame-based imaging, high temporal resolution generates large amounts of data that require computationally intensive processing. Event-based vision addresses this challenge with a completely different approach to acquiring optical information: each sensor pixel independently reports a timestamped event whenever it detects a change in intensity. Event-based vision can therefore combine high temporal resolution in rapidly changing image regions with greatly reduced data transmission and processing requirements.

Figure 2: Event-based image sensing improves processing efficiency by responding only to signal strength changes
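The per-pixel change detection behind event-based vision can be emulated from ordinary frames, which is a common way to experiment with the idea in software. The sketch below assumes an idealized pixel model: each pixel keeps a reference log intensity and emits a timestamped event with polarity +1 or -1 whenever the current log intensity moves at least a contrast threshold away from that reference. The function `frames_to_events`, the `Event` tuple, and the default threshold are hypothetical; a real sensor performs this asynchronously in its pixel circuitry rather than by sampling frames.

```python
import math
from typing import List, NamedTuple, Sequence

Frame = Sequence[Sequence[float]]


class Event(NamedTuple):
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 = brighter, -1 = darker


def frames_to_events(
    frames: Sequence[Frame],
    timestamps: Sequence[float],
    threshold: float = 0.2,
    eps: float = 1e-3,
) -> List[Event]:
    """Emulate an idealized event sensor from a sequence of intensity frames.

    Each pixel stores a reference log intensity. Whenever the current log
    intensity differs from it by at least `threshold`, the pixel emits an
    event and moves its reference one threshold step toward the new value.
    """
    # eps avoids log(0) for completely dark pixels
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value + eps) - ref[y][x]
                # A large change can cross the threshold several times.
                while abs(delta) >= threshold:
                    polarity = 1 if delta > 0 else -1
                    events.append(Event(t, x, y, polarity))
                    ref[y][x] += polarity * threshold
                    delta -= polarity * threshold
    return events


if __name__ == "__main__":
    # Hypothetical 2x2 scene: only the bottom-right pixel doubles in brightness.
    frames = [
        [[100.0, 100.0], [100.0, 100.0]],
        [[100.0, 100.0], [100.0, 200.0]],
        [[100.0, 100.0], [100.0, 200.0]],
    ]
    for event in frames_to_events(frames, timestamps=[0.0, 0.01, 0.02]):
        print(event)
```

Static pixels produce no events at all, which is where the reduction in data and processing comes from: only the pixel whose brightness changed is reported, and only at the moment it changed.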

Another promising innovation is the ongoing miniaturization of image sensors, which makes them easier than ever to integrate into robotic arms or other components without impeding movement. This is the application targeted by the emerging market for miniature spectrometers. Driven by the development of smart electronic components and Internet of Things devices, low-cost miniature spectrometers are becoming increasingly widespread across different fields. Integrating a miniature spectrometer that covers the spectral region from visible light to SWIR can significantly extend the functionality of a standard visible-light sensor.
